If You Want To Learn NLP, Start Here: An Introduction to Natural Language Processing (NLP)

If you’re a newbie and want a solid NLP foundation, this is the best place to start. Learn the basics of NLP here and get started!

If you want to learn about Natural Language Processing, this post is a roadmap to help you.

Introduction:

What is Natural Language Processing?

Don't worry, I’ll break this down for you in clear, concise language.

Imagine a computer that consistently understands what you say and does exactly what you want. Now picture how natural it feels to speak to your computer. It’s almost as if you’re talking to another human being: you tell your computer what you want, and it figures out how to do it for you. For all of this to happen, however, we first need our computers to understand our language. The field concerned with teaching computers to work with human language is called Natural Language Processing (NLP).

Sounds simple, right? Of course, the devil's in the details.

Let’s first look at the definition of NLP (according to Wikipedia) before we dig deeper.

- Natural Language Processing: Natural language processing (NLP) is a subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves.

We are teaching computers to do the same things that people do when they read and understand sentences. However, computers need a lot of practice before they get good at finding patterns in what they see. To train them, we give them many examples, often labeled with the answer we expect. Given enough examples, they get better at finding patterns, and for some narrow, repetitive tasks they can become faster than humans.

Let’s look at some of the examples of NLP around us.

NLP Around Us:

There are countless ways that NLP influences us today, but it's usually not apparent. Let’s look at how we use NLP daily without us realizing it:

- Filtering Emails: Have you noticed how some emails are automatically marked as spam, while the others land in your inbox? Or how Gmail sorts emails into “Primary”, “Social” and “Promotions” categories? This is the work of NLP. After being trained on a large number of email samples, a model can filter out most spam, malicious, and phishing emails before they reach your inbox, while also sorting the remaining emails into the three categories above. Such filters are typically text classifiers built with machine learning.

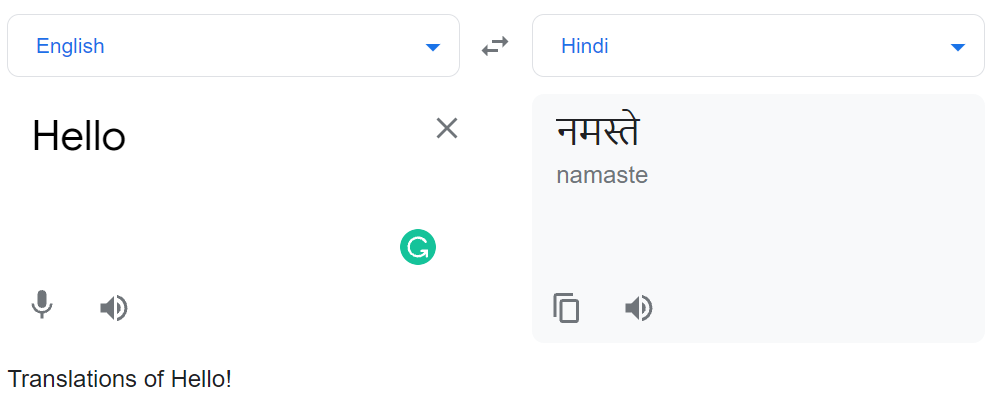

- Language Translation: For a long time, it seemed nearly impossible to translate one language into another automatically without losing much of the meaning in the process. Fast forward to the present, and tools like Google Translate have changed the game of language translation. The whole process is possible through NLP.

By using millions of examples, NLP improves the quality of translation. It uses broader context to get the most relevant translation. The result is then rearranged and adapted to approach the correct grammar as well.

- Voice Text Messaging: Voice-to-text messaging is an alternative to typing. Many of us have already used it on our phones or while looking something up on Google. If you have, then you are already using NLP without realizing it. With voice input you can ask questions, respond to notifications quickly, and set reminders without touching the keyboard.

- Smart Assistants: Without NLP, smart assistants would not be what they are today. These include Siri, Alexa and Cortana.

NLP, Natural Language Understanding (NLU) and Natural Language Generation (NLG) power the most advanced virtual assistants today.

Let’s first understand what each of these terms means.

- NLP: As discussed above, NLP is about programming a computer to process and analyze human language. In a smart assistant, NLP breaks the spoken language down into pieces such as parts of speech and word stems.

- NLU: Once the spoken language has been broken down, Natural Language Understanding (NLU) lets the machine interpret it and work out its meaning. It does so by looking at the context, semantics (the meaning of the text), syntax (the structure and grammar of sentences and phrases), intent, and sentiment of the text.

- NLG: This is the last step in building a smart assistant. Natural Language Generation (NLG) gives machines the ability to “communicate” through speech or text by producing written or spoken narratives from a data set.

Some other everyday examples of NLP are writing tools like Grammarly, predictive text (autocorrect and autocomplete while emailing or texting), chatbots and more.
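To make the email filtering example above a little more concrete, here is a minimal sketch of a text classifier that learns from labeled examples. It uses a Naive Bayes approach, which is an assumption on my part and not necessarily what Gmail or any real provider uses. The training messages and the names `TRAIN`, `train` and `classify` are made up for illustration.

```python
import math
from collections import Counter

# Tiny hand-written training set (an assumption; real filters train on far more mail).
TRAIN = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team on monday", "ham"),
]


def train(examples):
    """Count words per label and how many messages each label has."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Pick the label with the highest log-probability (add-one smoothing)."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    scores = {}
    for label, counts in word_counts.items():
        total = sum(counts.values())
        # Start from the prior: how common this label was in training.
        score = math.log(label_counts[label] / sum(label_counts.values()))
        for word in text.split():
            score += math.log((counts[word] + 1) / (total + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAIN)
print(classify("free prize", *model))              # spam
print(classify("team meeting on monday", *model))  # ham
```

With only four training messages this is a toy, but the idea is the same as in the bullet above: the more labeled examples the model sees, the better its word statistics become.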

Now that you know how NLP is used, let’s look at some of its advantages.

Advantages:

- Chatbots: Using data to make the best business decisions is a requirement. But finding relevant information quickly and easily shouldn’t feel like work. That's where NLP chatbots come into play. They increase productivity by collecting data from multiple sources and saving time. They have also done wonders for customer service, because customers get a response immediately.

- Easier to Process Forms or Compile Surveys: People cannot quickly process and analyze large amounts of text-based information by hand, and computers cannot do so without tools built for language. NLP techniques make it possible to extract this information automatically and with reasonable accuracy. This saves time and increases productivity by automating repetitive tasks, such as compiling surveys or processing forms.

- Helpful in Recruitment Process: Forget going through stacks of paper resumes. NLP applications can help you identify the candidates with the best qualifications by recognizing the desired characteristics much faster than a human reader. Such screening can reduce human error, although a model can also inherit biases from the data it was trained on, so its results still need review. Used carefully, it saves time and money for both employers and candidates.

- Sentiment Analysis/ Opinion mining/ Emotion AI: Customers express their thoughts and feelings more openly now, and sentiment analysis is a practical way to make sense of this feedback. Sentiment analysis is the process of detecting positive or negative sentiment in text. Even simple NLP algorithms let us analyze customer sentiment in online surveys, comments and reviews far faster than reading them by hand.

- Time-Saving and Cost-Efficient: Using NLP, you can eliminate repetitive functions to free up your employees for higher-level tasks. The process is time-saving and cost-efficient. This can lead to increased productivity, engagement and overall better performance of the employees and the company as a whole.
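To give a feel for the sentiment analysis bullet above, here is a minimal sketch that counts positive and negative words from a small hand-made word list. The word lists and the function name `sentiment` are assumptions for illustration. Real systems rely on much larger lexicons or on trained models, and this sketch does not handle negation or sarcasm.

```python
import re

# Tiny hand-made lexicon; real systems use far larger lexicons or trained models.
POSITIVE = {"good", "great", "love", "excellent", "happy", "fast"}
NEGATIVE = {"bad", "poor", "hate", "terrible", "slow", "broken"}


def sentiment(text: str) -> str:
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone, the camera is great"))  # positive
print(sentiment("Terrible battery and slow support"))       # negative
```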

Challenges:

While NLP is great for improving workflow, there are several challenges to bear in mind.

There are thousands of diverse languages in the world. A human can understand their own language without much effort. For machines, however, the same task is complex: they have to interpret the vast amount of data fed to them before they can analyze it and, in turn, speak or write.

Even if we look only at English, the language contains many ambiguities, which makes it hard for a computer to understand on its own.

For example, let’s look at the sentence below.

“The professor said on Monday he would give an exam”

The above sentence can have two meanings: either the professor said it on Monday, or the exam will be given on Monday.

Another ambiguity arises with homonyms: words with the same spelling and pronunciation but different meanings depending on the context.

For example,

“The dog does not bark much”

“The tree has a rough bark”

Here, the word “bark” has 2 different meanings based on the context.

These ambiguities are easier for the human mind to understand. However, when it comes to machines, these can create some problems.
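One simple way a program can guess which “bark” is meant is to compare the words around it with words that typically go with each meaning. The sketch below is a heavily simplified version of that idea (similar in spirit to the Lesk algorithm). The sense inventory `SENSES` is hand-written for this example and is an assumption. A real system would draw on a lexical resource or a trained model.

```python
import re

# Hypothetical mini sense inventory for "bark"; each sense lists words that
# tend to appear near it. A real system would use a much richer resource.
SENSES = {
    "sound a dog makes": {"dog", "loud", "growl", "puppy", "noise"},
    "outer layer of a tree": {"tree", "rough", "trunk", "wood", "branch"},
}


def disambiguate(sentence: str) -> str:
    """Return the sense whose word list shares the most words with the sentence."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    return max(SENSES, key=lambda sense: len(SENSES[sense] & context))


print(disambiguate("The dog does not bark much"))  # sound a dog makes
print(disambiguate("The tree has a rough bark"))   # outer layer of a tree
```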

Other problems machines face while interpreting language include irony and sarcasm, errors in the text, synonyms, slang and more.

To overcome these, models are trained on very large amounts of data, in some cases billions of examples. In general, the more data, the better the model performs. As the field develops, we will probably see more practical solutions to some of these challenges.

Techniques Used In NLP:

The two main techniques used in NLP are Syntactic Analysis and Semantic Analysis.

Let’s first understand the meaning of the terms “Syntax” and “Semantics” in simple terms.

- Syntax: It refers to the rules of grammar in sentence structure, that is, the way words are ordered to form sentences.

- Semantics: It refers to the meaning of a sentence.

There can be times when a sentence is syntactically correct but semantically incorrect. For example, consider the sentence “Colorless green ideas sleep furiously.” The sentence is grammatically well formed, but it has no useful meaning.

Both syntax and semantics are important for constructing valid sentences in any language, and the same goes for NLP. Therefore, both techniques, Syntactic Analysis and Semantic Analysis, are equally important for NLP.

Let's move on further to understand what Syntactic and Semantic Analysis are:

- Syntactic Analysis: It is also known as syntax analysis or parsing. It is the process of analyzing natural language against the rules of grammar to see how well a sentence conforms to them. For syntactic analysis, sentences are broken down into groups of words (phrases) rather than treated only as individual words. In simpler terms, syntactic analysis tells us whether a sentence is grammatically well formed and how its parts fit together, not whether it makes sense.

- Semantic Analysis: Semantic analysis is the process of analyzing the surrounding text and the text structure to determine the proper meaning of words that have more than one definition. This analysis gives computers the ability to interpret human language by identifying the connections between the individual words of a sentence in a particular context.

It’s also important to note that semantic analysis is one of the toughest challenges of NLP.

NLP Pipeline:

When we train any model, we try to break down complex problems into a number of small problems, build models for each and then integrate them. The same procedure is followed while dealing with NLP. We try to break down the process of understanding the English language into small pieces, build models for each and integrate them all. This is how we build a pipeline.

Let’s try to understand each step in detail.

Step 1: Sentence Segmentation

Sentence segmentation, as the name suggests, simply means breaking the paragraph into separate sentences.

For example,

Input:

My family is very important to me. We do lots of things together. My father teaches mathematics, and my mother is a nurse at a big hospital. My brothers are very smart and work hard in school. My sister is a nervous girl, but she is very kind. My grandmother also lives with us.

Output:

My family is very important to me.

We do lots of things together.

My father teaches mathematics, and my mother is a nurse at a big hospital.

My brothers are very smart and work hard in school.

My sister is a nervous girl, but she is very kind.

My grandmother also lives with us.
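Here is a minimal sketch of sentence segmentation that splits the example paragraph into its six sentences. It simply splits after a full stop, exclamation mark or question mark that is followed by whitespace. That rule is an assumption that works for this paragraph but would break on abbreviations such as “Dr.”, which is why real segmenters use more rules or a trained model.

```python
import re

paragraph = (
    "My family is very important to me. We do lots of things together. "
    "My father teaches mathematics, and my mother is a nurse at a big hospital. "
    "My brothers are very smart and work hard in school. "
    "My sister is a nervous girl, but she is very kind. "
    "My grandmother also lives with us."
)


def split_sentences(text: str) -> list[str]:
    """Split after '.', '!' or '?' when followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


sentences = split_sentences(paragraph)
for sentence in sentences:
    print(sentence)
```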

Step 2: Word Tokenization

In this step, we break the output sentences into individual words called “tokens”. A simple approach is to split wherever there is a space between two words. Punctuation marks are treated as tokens as well, since they carry meaning too.

For example,

Input:

My family is very important to me.

We do lots of things together.

My father teaches mathematics, and my mother is a nurse at a big hospital.

My brothers are very smart and work hard in school.

My sister is a nervous girl, but she is very kind.

My grandmother also lives with us.

Output:

“My”, “family”, “is”, “very”, ... (and so on).
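A minimal tokenizer along these lines can be written with one regular expression. The pattern below is my own choice for illustration: it keeps runs of letters or digits together and treats each punctuation mark as its own token. It would split a contraction such as “don't” into three tokens, so real tokenizers add extra rules.

```python
import re


def tokenize(sentence: str) -> list[str]:
    """Return words and punctuation marks as separate tokens."""
    # \w+ matches a run of letters/digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", sentence)


print(tokenize("My family is very important to me."))
# ['My', 'family', 'is', 'very', 'important', 'to', 'me', '.']
```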

Step 3: Predicting Part Of Speech For Each Token

In this step, we predict whether each token is a noun, verb, pronoun, adjective, adverb, determiner etc. We do this by feeding the tokens to a pre-trained part-of-speech classification model. This model has been trained on a large amount of English text in which each word is labeled with its part of speech, so when it later sees a similar word, it can predict the part of speech it belongs to.

It is important to note that the model doesn’t understand the sense of the words; it just classifies them on the basis of its previous experience.

For example,

Input: the tokens “My”, “family”, “is”, fed to the part-of-speech classification model

Output:

'My' - determiner

'family' - noun

'is' - verb
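The idea of classifying “on the basis of previous experience” can be sketched with a toy tagger that simply remembers which tag each word was most often seen with. The training pairs in `TRAINING` are hand-labeled for this example and are an assumption. A real tagger is trained on a large corpus and also looks at the neighbouring words.

```python
from collections import Counter, defaultdict

# Tiny hand-labelled training set (an assumption made for illustration).
TRAINING = [
    ("my", "determiner"), ("family", "noun"), ("is", "verb"),
    ("the", "determiner"), ("duck", "noun"), ("is", "verb"),
    ("work", "verb"), ("work", "noun"), ("work", "verb"),
]


def train(examples):
    """Count how often each word was seen with each tag."""
    counts = defaultdict(Counter)
    for word, pos in examples:
        counts[word][pos] += 1
    return counts


def tag(tokens, counts):
    """Give each token the tag it was most often seen with, else 'unknown'."""
    tagged = []
    for token in tokens:
        seen = counts.get(token.lower())
        tagged.append((token, seen.most_common(1)[0][0] if seen else "unknown"))
    return tagged


model = train(TRAINING)
print(tag(["My", "family", "is"], model))
# [('My', 'determiner'), ('family', 'noun'), ('is', 'verb')]
```

A word the tagger has never seen comes back as 'unknown', which shows that it has no understanding of the words themselves.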

Step 4: Lemmatization

In this step, we reduce each word to its root form before feeding it to the model.

For example,

"The duck is eating the bread."

"The ducks are eating the bread."

Here the words “duck” and “ducks” refer to the same concept, but the computer does not know that. We need to teach the computer that we are talking about the same thing in both sentences. Therefore, we find the most basic form (the root form, or lemma) of the word and feed that to the model.

Similarly, we can use it for verbs too: ‘study’ and ‘studying’ should be considered the same.
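Below is a very rough sketch of the idea, using a small lookup table for irregular words plus two suffix rules. The table `IRREGULAR` and the rules are assumptions chosen to cover the examples in this post. They will get many other words wrong (“running” would become “runn”), which is why real lemmatizers rely on a dictionary and on the part of speech from the previous step.

```python
# Irregular forms need a lookup; the entries here are a small hand-picked sample.
IRREGULAR = {"are": "be", "is": "be", "was": "be", "ate": "eat", "geese": "goose"}


def lemmatize(word: str) -> str:
    """Reduce a word to a base form using a lookup plus two suffix rules."""
    w = word.lower()
    if w in IRREGULAR:
        return IRREGULAR[w]
    if w.endswith("ing") and len(w) > 5:
        return w[:-3]  # "studying" -> "study", "eating" -> "eat"
    if w.endswith("s") and not w.endswith("ss") and len(w) > 3:
        return w[:-1]  # "ducks" -> "duck"
    return w


for word in ["duck", "ducks", "study", "studying", "is", "are"]:
    print(word, "->", lemmatize(word))
```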

Step 5: Identifying Stop Words

Some words in English, such as ‘a’, ‘and’ and ‘the’, are used far more frequently than others. These words often add noise to the analysis, so we take them out.

Some NLP pipelines flag these as stop words and remove them before doing statistical analysis. The list of stop words can vary depending on the task and the output you expect.
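Removing stop words is a simple filter once you have a list. The list `STOP_WORDS` below is a short, hand-picked sample for illustration, and as noted above, the right list depends on the task.

```python
# A short illustrative stop-word list; real lists are longer and task-specific.
STOP_WORDS = {"a", "an", "and", "the", "is", "at", "to", "in", "of"}


def remove_stop_words(tokens: list[str]) -> list[str]:
    """Keep only the tokens that are not in the stop-word list."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]


tokens = ["my", "mother", "is", "a", "nurse", "at", "a", "big", "hospital"]
print(remove_stop_words(tokens))
# ['my', 'mother', 'nurse', 'big', 'hospital']
```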

Step 6 (a): Dependency Parsing

Dependency parsing means finding out how the words in a sentence are related to each other. In this step, we create a parse tree whose root is the main verb. In the first sentence of our example, ‘is’ is the main verb, so it becomes the root of the parse tree. We then build a parse tree for every sentence in the same way, each with one root word, which is the main verb of the sentence. We also label the relationship between each pair of connected words.

Here as well, we train a model to identify the dependencies between words by feeding it many example sentences. The task is not an easy one, though.
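Training a parser is beyond the scope of this post, but it helps to see what its output looks like. The sketch below stores a parse of the first example sentence as a list of (token, head index, relation) entries. The parse is hand-annotated by me for illustration, not produced by a model, and the relation names are informal labels, not an official tag set.

```python
# Hand-annotated parse of the first example sentence (an assumption for
# illustration, not parser output). Each entry is (token, head index, relation);
# a head index of -1 marks the root.
PARSE = [
    ("My", 1, "possessive"),
    ("family", 2, "subject"),
    ("is", -1, "root"),
    ("very", 4, "modifier"),
    ("important", 2, "complement"),
    ("to", 4, "preposition"),
    ("me", 5, "object of preposition"),
]


def root(parse):
    """Return the token that has no head."""
    return next(token for token, head, _ in parse if head == -1)


def children(parse, index):
    """Return the tokens whose head is the token at `index`."""
    return [token for token, head, _ in parse if head == index]


print("root:", root(PARSE))                        # root: is
print("children of root:", children(PARSE, 2))     # ['family', 'important']
for token, head, relation in PARSE:
    print(f"{token} --{relation}--> {PARSE[head][0] if head >= 0 else 'ROOT'}")
```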

Step 6(b): Finding Noun Phrases

Here, we group the words that represent the same idea.

For example,

“My brothers are very smart and work hard in school.”

Here, we can group the words “My” and “brothers” into the noun phrase “My brothers”, as together they refer to one thing. The words “very smart” and “work hard” describe the brothers but are not part of the noun phrase. We can use the output of dependency parsing to combine such words.
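A noun phrase is a noun together with the words attached to it, such as a determiner or adjectives. As a minimal sketch, the code below finds noun phrases from part-of-speech tags and not from a dependency parse, which is a simpler technique swapped in to keep the example short. The tags in `TAGGED` are assigned by hand and are an assumption.

```python
# Hand-tagged tokens (an assumption; in a pipeline these come from Step 3).
TAGGED = [
    ("My", "DET"), ("brothers", "NOUN"), ("are", "VERB"), ("very", "ADV"),
    ("smart", "ADJ"), ("and", "CONJ"), ("work", "VERB"), ("hard", "ADV"),
    ("in", "PREP"), ("school", "NOUN"), (".", "PUNCT"),
]


def noun_phrases(tagged):
    """Collect runs of determiners/adjectives/nouns that end in a noun."""
    phrases, current = [], []
    # The extra END entry flushes a run that reaches the end of the sentence.
    for word, pos in tagged + [("", "END")]:
        if pos in {"DET", "ADJ", "NOUN"}:
            current.append((word, pos))
            continue
        # Run ended: keep it only if it finishes with a noun.
        if current and current[-1][1] == "NOUN":
            phrases.append(" ".join(w for w, _ in current))
        current = []
    return phrases


print(noun_phrases(TAGGED))
# ['My brothers', 'school']
```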

Step 7: Named Entity Recognition (NER)

Here, NER maps words to real-world entities, such as people, organizations and places that actually exist. Using NLP, we can automatically extract the real-world entities mentioned in a document.
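The simplest possible form of entity recognition is to look names up in a list of known entities (often called a gazetteer). The sketch below does just that. The list `GAZETTEER` and the example sentence are made up for illustration, and real NER systems use trained models that also consider the context around each name.

```python
# Hypothetical gazetteer: a hand-made list of known names and their types.
GAZETTEER = {
    "London": "PLACE",
    "New York": "PLACE",
    "Google": "ORGANIZATION",
}


def find_entities(text: str) -> list[tuple[str, str]]:
    """Return (name, type) for every gazetteer entry found, in text order."""
    hits = []
    for name, label in GAZETTEER.items():
        position = text.find(name)
        if position != -1:
            hits.append((position, name, label))
    return [(name, label) for _, name, label in sorted(hits)]


print(find_entities("My grandmother moved from London to New York."))
# [('London', 'PLACE'), ('New York', 'PLACE')]
```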

Step 8: Coreference Resolution

“My sister is a nervous girl, but she is very kind.”

Here, we know that ‘she’ stands for “my sister”, but a computer does not automatically know that both tokens refer to the same person, because it processes each token (and each sentence) separately. Pronouns are used very often in English, and it is a tough task for a computer to work out what each one refers to.

These are the 8 basic steps involved in a typical NLP pipeline.

I hope you now have a clearer picture of how an NLP pipeline works.

There is definitely a lot more to NLP, but we have tried to focus on the basics first.
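To show the kind of reasoning involved, here is a toy heuristic that links each pronoun to the nearest earlier noun of matching gender. The word lists `GENDER` and `PRONOUNS` are hand-made assumptions, and the heuristic itself is far too simple for real text, where coreference is usually handled by trained models.

```python
# Hypothetical gender lexicon used only for this sketch.
GENDER = {"sister": "f", "mother": "f", "grandmother": "f",
          "brother": "m", "father": "m"}
PRONOUNS = {"she": "f", "her": "f", "he": "m", "him": "m"}


def resolve(tokens: list[str]) -> dict[int, str]:
    """Map each pronoun's position to the nearest earlier noun of matching gender."""
    links = {}
    for i, token in enumerate(tokens):
        gender = PRONOUNS.get(token.lower())
        if gender is None:
            continue  # not a pronoun we know about
        for candidate in reversed(tokens[:i]):
            if GENDER.get(candidate.lower()) == gender:
                links[i] = candidate
                break
    return links


tokens = "My sister is a nervous girl , but she is very kind .".split()
print(resolve(tokens))
# {8: 'sister'}
```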

Conclusion:

Although we have discussed the basics of NLP today, from the definition to an NLP pipeline, there is a lot more to NLP.

I hope this post will help you gain some confidence to dive deeper into the field.

Thanks for stopping by!

References:

- https://en.wikipedia.org/wiki/Natural_language_processing

- https://builtin.com/data-science/introduction-nlp

- https://towardsdatascience.com/introduction-to-natural-language-processing-nlp-323cc007df3d

- https://capacity.com/enterprise-ai/faqs/what-are-the-advantages-of-natural-language-processing-nlp/

- https://www.geeksforgeeks.org/introduction-to-natural-language-processing/