History of Artificial Intelligence

In this article, you will read about the history of artificial intelligence and how it came into existence.

"Can machines think?" - Alan Turing

A long time ago, in a galaxy far, far away… artificial intelligence was a concept that existed only in science fiction. Then, in the late 1950s, Frank Rosenblatt developed the Perceptron, an algorithm that could learn from examples to classify patterns. It is often regarded as a forerunner of modern machine learning. Since then, AI has grown steadily more advanced and has been used for everything from diagnosing cancer to beating humans at complex board games.

Put simply, AI is the simulation of human intelligence by machines, such as computer systems.

The development of artificial intelligence (AI) is nothing new, but it has advanced rapidly in recent decades. AI research has been around since the 1950s, but it wasn’t until the 1980s that AI became a mainstream concept. Along the way, the field has gone through several waves of advancement and has even experienced “winter periods”, when interest and funding waned for a time. Today, artificial intelligence is more advanced than ever before, and its future looks bright.

Roller Coaster of Advancements and Fallbacks

1950s:

Science fiction popularised the concept of artificially intelligent robots throughout the first half of the twentieth century. It started with the "heartless" Tin Man from The Wizard of Oz and continued with the humanoid robot in Metropolis that impersonated Maria. By the 1950s, a generation of scientists, mathematicians, and philosophers had absorbed the concept of AI as part of their culture. Alan Turing, a young British polymath who explored the mathematical possibility of AI, was one of them.

Turing observed that humans use available information as well as reason to solve problems and make decisions, so why can't machines do the same? This was the logical framework of his 1950 paper, Computing Machinery and Intelligence, in which he discussed how to build intelligent machines and how to test their intelligence.

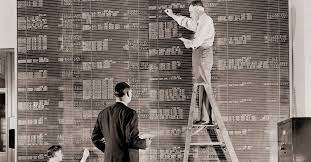

Sadly, talk is cheap. What prevented Turing from getting to work right away? First, computers had to undergo a fundamental transformation. Prior to 1949, computers lacked a critical requirement for intelligence: they could only execute commands, not store them. To put it differently, computers could be told what to do but couldn't remember what they had done. Second, computing was prohibitively expensive. Only prestigious universities and large technology companies could afford to spend time exploring these uncharted waters. To persuade funding sources that machine intelligence was worth pursuing, a proof of concept was needed, along with support from high-profile people.

Late 1950s and 1960s:

Five years after Turing's paper, Allen Newell, Cliff Shaw, and Herbert Simon's Logic Theorist provided the proof of concept. The Logic Theorist was a programme funded by the Research and Development (RAND) Corporation and designed to mimic human problem-solving skills. It was presented in 1956 at the Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI), hosted by John McCarthy and Marvin Minsky, and is widely considered the first artificial intelligence programme.

McCarthy, envisioning a great collaborative effort, convened a historic conference that brought together prominent researchers from many fields for an open-ended discussion on artificial intelligence, a term he coined for the event. Unfortunately, the conference fell short of McCarthy's expectations: people came and went as they liked, and no standard methods for the field were agreed upon. Despite this, everyone was united in their belief that AI could be achieved. The importance of this event cannot be overstated, since it set the stage for the following two decades of AI research.

AI flourished from 1957 to 1974. Computers could store more information and became faster, cheaper, and more accessible. Machine learning algorithms improved as well, and people became better at determining which algorithm to apply to a given problem. Early demonstrations, such as Newell and Simon's General Problem Solver and Joseph Weizenbaum's ELIZA, showed promise in problem-solving and natural language interpretation, respectively.

1970s:

In 1970, Marvin Minsky told Life Magazine that within three to eight years "we will have a machine with the general intelligence of an average human being." While the basic proof of principle had been established, there was still a long way to go before the end goals of natural language processing, abstract reasoning, and self-recognition could be realised.

1980s:

Two factors rekindled AI in the 1980s: an expansion of the algorithmic toolkit and a boost in funding. "Deep learning" techniques popularised by John Hopfield and David Rumelhart allowed computers to learn from experience. Edward Feigenbaum, on the other hand, pioneered expert systems, which emulated the decision-making process of a human expert. The programme would ask an expert in a field how to respond in a given situation, and once this had been captured for practically every situation, non-experts could receive advice from the programme. Expert systems were widely used in industry. The Japanese government heavily funded expert systems and other AI-related endeavours as part of its Fifth Generation Computer Project (FGCP).

Unfortunately, most of the FGCP's ambitious goals were not met. However, it could be argued that the project's indirect effects inspired a new generation of talented engineers and scientists. Regardless, the FGCP's funding dried up, and AI faded from view.
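
To make the expert-system idea from this decade a little more concrete, here is a minimal sketch in Python. It is only an illustration of the general "if these facts hold, give this advice" pattern, not a reconstruction of any historical system. The `Rule` class, the `advise` function, and the car-repair rules are all hypothetical and invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One piece of captured expertise: if all conditions hold, give this advice."""
    conditions: frozenset  # facts that must all be observed
    advice: str


# Hypothetical knowledge base, invented for illustration only.
# In a real expert system these rules would come from interviewing a human expert.
RULES = [
    Rule(frozenset({"engine_wont_start", "lights_dim"}), "Check or recharge the battery."),
    Rule(frozenset({"engine_wont_start", "fuel_gauge_empty"}), "Refuel the car."),
    Rule(frozenset({"engine_overheating"}), "Stop and check the coolant level."),
]


def advise(observed_facts):
    """Return the advice of every rule whose conditions are all among the observed facts."""
    facts = set(observed_facts)
    matches = [rule.advice for rule in RULES if rule.conditions <= facts]
    # Fall back to a human when no rule covers the situation.
    return matches or ["No matching rule; consult a human expert."]


print(advise(["engine_wont_start", "lights_dim"]))
```

A non-expert only has to describe what they observe. The "expertise" lives entirely in the rule list, which is also why such systems struggle with situations nobody wrote a rule for.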

1990s and beyond:

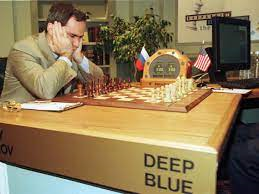

Surprisingly, AI thrived in the absence of government funding and public attention. Many of artificial intelligence's landmark goals were achieved during the 1990s and 2000s. In 1997, Garry Kasparov, the reigning world chess champion and grandmaster, was beaten by IBM's Deep Blue, a chess-playing computer programme. This widely publicised match was the first time a reigning world chess champion had lost to a computer, and it was a significant step towards artificially intelligent decision-making software.

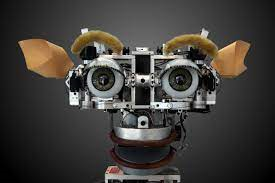

In the same year, speech recognition software developed by Dragon Systems was implemented on Windows. This was yet another significant stride forward, this time in the direction of spoken language interpretation. There didn't appear to be any problem that machines couldn't handle. Even human emotion was fair game, as demonstrated by Kismet, a robot designed by Cynthia Breazeal that could recognise and display emotions.

What kind of future can we expect?

Because AI has developed slowly, many people think of its future as something far away. But the truth is that AI is already here and, with the right guidance, it can be used to further human knowledge and improve the way we live. Yes, I'm talking about self-driving cars: passing a driving test may soon stop being an issue. It is, however, important to understand how AI can be used, so that we maximize its potential benefits and minimize its potential risks, and so that we don’t create a future where humans are replaced by machines. (We have all seen I, Robot!)

As far as policy is concerned, governments need to be careful about the potential dangers posed by this new technology. It is imperative that policymakers set concrete guidelines for the development of AI, so that its benefits are maximized and its risks to humankind are minimized. Additionally, as AI becomes more advanced, we must be mindful of how it will affect our society and ensure that its development is in the best interests of the public.

From Siri to cancer diagnosis, artificial intelligence will soon be everywhere, driving growth around the globe and immensely affecting your lifestyle and your work.

We will discuss this further in our next section, on how AI will affect your job.

So, stay tuned. See you there!