Guide to Using the OpenAI Assistants API

Discover how OpenAI's Assistant API can transform your applications with intelligent virtual assistants. Explore tools and integrations that elevate user interactions effortlessly.

Introduction

In the rapidly advancing world of artificial intelligence, OpenAI's Assistants API gives developers in a wide range of fields a robust way to build AI assistants tailored to their application's needs.

These assistants follow specific instructions and can use various models, tools and files to answer user questions. At the time of writing, the API supports three main tools: Code Interpreter, File Search, and Function Calling. Each extends the assistant's capabilities and improves user interactions.

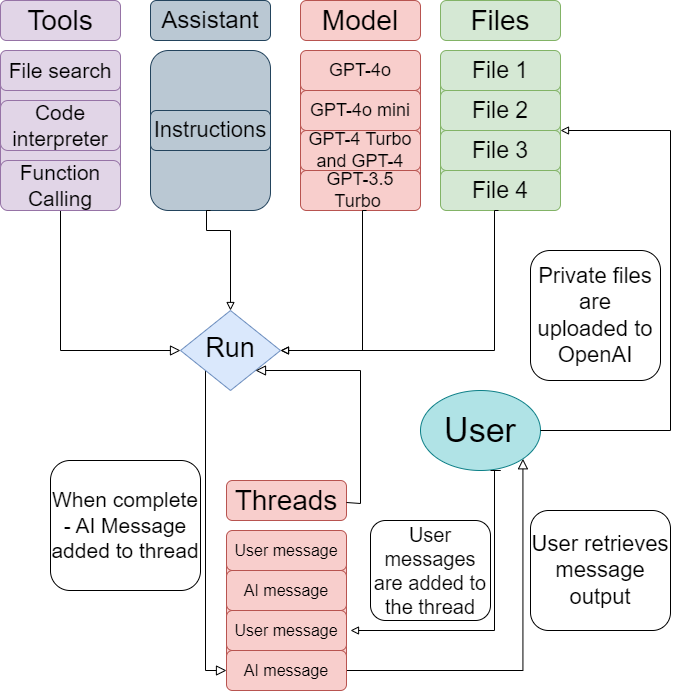

How does the Assistant work?

Let us take a closer look at how the Assistants API works:

Detailed Workflow:

Components and Their Roles:

- User:
  - Initiates Requests: The user initiates interactions by sending specific requests to the assistant. These requests could involve tasks like file processing, code execution, function calls, or data retrieval.
  - Uploads Files: The user can upload private files to OpenAI, which are necessary for the assistant to process certain tasks.
  - Retrieves Output: After the task is processed, the user retrieves the output generated by the assistant.

- Assistant:
  - Instruction Parsing: The assistant parses the user's instructions and determines the required actions. This may involve identifying which of its enabled tools are needed to fulfill the request.
  - Task Orchestration: The assistant orchestrates the task execution by coordinating between its tools. It ensures that the correct sequence of operations is followed.

- Tools:
  - File Search:
    - Functionality: Allows the assistant to search the content of uploaded files and retrieve the passages most relevant to the user's query.
    - Use Case: Useful for finding information in specific documents or datasets required for processing tasks.
  - Code Interpreter:
    - Functionality: Writes and runs Python code in a sandboxed environment, using any files provided to it.
    - Use Case: Essential for tasks that involve programming, data analysis, or generating computational results.
  - Function Calling:
    - Functionality: Lets the model request calls to functions that you define; your application executes them and returns the results.
    - Use Case: Useful for specific operations that need to be performed as part of the user's request, such as data transformation or API calls.

- Model:
  - GPT-4o: At the time of writing, the most advanced version with the highest capabilities, used for complex and nuanced tasks.
  - GPT-4o mini: A lightweight version of GPT-4o, suitable for tasks requiring less computational power.
  - GPT-4 Turbo and GPT-4: High-performance models that provide a balance between capability and efficiency.
  - GPT-3.5 Turbo: An earlier model, efficient for less complex tasks, providing good performance for routine operations.

- Files:
  - Private Files: User-uploaded files stored securely on OpenAI's servers. These files are used as inputs for processing tasks.
  - Example files (as shown in the diagram): File 1, File 2, File 3, File 4.

Workflow Process:

- User request:
  - Message Sending: The user sends a detailed message to the assistant. This message specifies the task to be performed, such as "Analyze financial data from the uploaded CSV file" or "Run the provided Python code".
  - File Upload: If required, the user uploads files that are necessary for the task.

- Assistant coordination:
  - Instruction Analysis: The assistant analyzes the user's instructions to determine the necessary steps.
  - Tool Selection: The model decides which of the tools enabled on the assistant (File Search, Code Interpreter, Function Calling) to use. The model itself (e.g., GPT-4o for complex tasks, GPT-3.5 Turbo for simpler ones) is chosen by the developer when creating the assistant and can be overridden for an individual run.
  - Task Execution: The assistant coordinates the execution of tasks by invoking the selected tools in the correct sequence.

- Run:
  - Execution Process: The model and the selected tools carry out the task. For example:
    - Code Interpreter: Writes and runs Python code, retrying with revised code if it fails, and generates results or output files.
    - File Search: Retrieves relevant content from the uploaded files.
    - Function Calling: Requests calls to your functions; the run pauses with the status "requires_action" until your application submits the outputs.
  - Data Processing: During execution, data is processed according to the user's instructions. This may involve complex computations, data transformations or generating insights from the data.

- Threads:
  - Message Sequencing: Each conversation is stored as a thread containing the user messages and the assistant's responses. This maintains context and continuity in the conversation.
  - Thread Management: Threads are stored by OpenAI, and each conversation (for example, each user) can have its own thread, so requests are handled independently.

- Completion:
  - Final Output Generation: Upon task completion, the assistant adds a final message to the thread. This could contain the result of code execution, a reference to a generated file, or a detailed answer to the user's query.
  - Result Compilation: The assistant combines the information and tool results gathered during the run into this final response.

- User retrieves the output:
  - Output Delivery: The user retrieves the final output from the assistant. This could be in the form of a text message, a file download, or any other format specified by the user.
  - Result Utilization: The user can then utilize the output for their specific needs, such as further analysis, reporting, or decision-making.
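The Threads step is usually realized by giving each end user (or each conversation) its own thread and reusing it on later requests, so the model sees the earlier context. Below is a minimal in-memory sketch of that bookkeeping. `ThreadRegistry` and `thread_for` are hypothetical names, and the `create_thread` callable is an assumption you would wire to the real API, for example `lambda: client.beta.threads.create().id`. A production application would store the mapping in a database rather than in memory.

```python
from itertools import count
from typing import Callable, Dict


class ThreadRegistry:
    """Hypothetical helper that maps each end user to a single thread ID."""

    def __init__(self, create_thread: Callable[[], str]) -> None:
        # create_thread must create a new thread and return its ID,
        # e.g. lambda: client.beta.threads.create().id (assumed wiring)
        self._create_thread = create_thread
        self._threads: Dict[str, str] = {}

    def thread_for(self, user_id: str) -> str:
        """Return the user's thread ID, creating a new thread on first contact."""
        if user_id not in self._threads:
            self._threads[user_id] = self._create_thread()
        return self._threads[user_id]


# Offline demo: a fake factory stands in for client.beta.threads.create
ids = count(1)
registry = ThreadRegistry(lambda: f"thread_{next(ids)}")
alice_first = registry.thread_for("alice")
bob = registry.thread_for("bob")
alice_again = registry.thread_for("alice")
print(alice_first, bob, alice_again)
```

Because the same thread ID comes back for "alice", her second request would be appended to the conversation that already holds her first one, while "bob" gets a separate history.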

Tools supported by the Assistant API

Assistants created with the Assistants API can be equipped with tools that let them perform more complex tasks or interact with your application. OpenAI provides built-in tools (File Search and Code Interpreter), and Function Calling lets you define your own tools to extend the assistant's capabilities.

The Assistants API currently supports the following tools:

a. File search:

File Search extends the Assistant's knowledge beyond its underlying model with your own content, such as proprietary product details or documents shared by users. OpenAI takes care of parsing and chunking your documents and of generating and storing their embeddings.

Imagine an assistant that doesn't just rely on pre-existing knowledge but can also tap into your specific documents to provide more tailored responses. When a user asks a question, the Assistant combines vector search, which looks for semantic similarity, with keyword search, which focuses on exact matches, to find the most pertinent passages. This combination helps users receive accurate and detailed answers grounded in your documents.
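To make the idea of combining two rankings concrete, here is a toy, self-contained sketch of chunking plus hybrid retrieval. It is not OpenAI's implementation: the bag-of-words cosine similarity is only a stand-in for real embeddings (so it is still lexical, not semantic), and the two rankings are merged with reciprocal rank fusion, which is one common way to combine result lists. The function names, chunk sizes and fusion constant are our own assumptions.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> List[str]:
    """Split text into overlapping windows of words (a toy stand-in for OpenAI's chunking)."""
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two term-count vectors (stand-in for embedding similarity)."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def hybrid_search(query: str, chunks: List[str], top_k: int = 3, k: int = 60) -> List[str]:
    """Rank chunks by 'vector' similarity and by keyword overlap, then fuse the two rankings."""
    q_terms = tokenize(query)
    q_vec = Counter(q_terms)
    chunk_terms = [tokenize(c) for c in chunks]
    indices = range(len(chunks))

    vector_rank = sorted(indices, key=lambda i: cosine(q_vec, Counter(chunk_terms[i])), reverse=True)
    keyword_rank = sorted(indices, key=lambda i: len(set(q_terms) & set(chunk_terms[i])), reverse=True)

    # Reciprocal rank fusion: a chunk ranked highly by either method scores well
    fused = Counter()
    for ranking in (vector_rank, keyword_rank):
        for rank, i in enumerate(ranking, start=1):
            fused[i] += 1.0 / (k + rank)
    return [chunks[i] for i, _ in fused.most_common(top_k)]


docs = [
    "Apple sells iPhone and Mac products",
    "The weather is sunny today",
    "Services include iCloud and Apple Music",
]
print(hybrid_search("What products does Apple sell?", docs, top_k=1))
```

Reciprocal rank fusion only uses rank positions, so the two scores never need to be on the same scale; this is the main reason it is a popular way to merge a similarity ranking with a keyword ranking.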

Let us experiment on a few files:

Example 1:

First, we shall try it on a CSV file containing the highest and lowest opening values of 20 different stocks over the past 5 years, which looks something like this:

The code:

First, install the openai package (in a Colab or Jupyter notebook, prefix the command with !):

```bash
pip install openai
```

```python
import time

from openai import OpenAI

# Replace with your own key (or read it from an environment variable)
OPENAI_API_KEY = 'ENTER_YOUR_API_KEY'

client = OpenAI(api_key=OPENAI_API_KEY)
```

This snippet imports the OpenAI client and initializes it with your API key, enabling the script to interact with OpenAI's services, such as generating text or retrieving embeddings.

```python
assistant = client.beta.assistants.create(
    instructions=(
        "You are an expert financial analysis assistant. When given a file with financial "
        "data (such as CSV, PDF, etc.), answer the questions asked by the user. "
        "Only refer to the data provided; do not generate responses on your own."
    ),
    model="gpt-4o",
    tools=[{"type": "file_search"}],
)
```

We create a new assistant using the OpenAI client, specifying that the assistant should function as an expert in financial analysis. It is instructed to answer user questions based solely on provided financial data files, such as CSV or PDF, without generating independent responses. The assistant is configured to use the "gpt-4o" model and includes the File Search tool to retrieve relevant information from the provided documents.

```python
# Colab-specific upload widget; outside Colab, open the file with open(path, "rb") instead
from google.colab import files
import io

uploaded = files.upload()
filename = next(iter(uploaded))  # name of the first uploaded file

# Wrap the raw bytes in a file-like object; the name tells the API the file type
file_stream = io.BytesIO(uploaded[filename])
file_stream.name = filename

# Create a vector store and upload the file, waiting until processing finishes
vector_store = client.beta.vector_stores.create(name="fin analysis")

file_batch = client.beta.vector_stores.file_batches.upload_and_poll(
    vector_store_id=vector_store.id, files=[file_stream]
)

print(file_batch.status)
print(file_batch.file_counts)
```

This code uploads a file through Colab's upload widget and prepares it for File Search. The file's bytes are wrapped in a named stream, a new vector store called "fin analysis" is created, and upload_and_poll uploads the batch of files (here, just one) to that store and waits until OpenAI has finished processing it. The printed batch status and file counts confirm whether the operation succeeded. Depending on your openai SDK version, vector stores may be available as client.vector_stores instead of client.beta.vector_stores, since newer releases moved them out of beta.

Output:

```python
assistant = client.beta.assistants.update(
    assistant_id=assistant.id,
    tool_resources={"file_search": {"vector_store_ids": [vector_store.id]}},
)
```

We now update the previously created financial analysis assistant by linking it to a specific vector store using its ID. The assistant is configured to use the "file_search" tool, which now includes access to the vector store containing the uploaded financial data, enabling the assistant to retrieve and utilize this data to answer user queries accurately.

```python
thread = client.beta.threads.create(
    messages=[
        {
            "role": "user",
            "content": "Which company had the highest open stock value in 2021, what was the value in dollars?",
        }
    ]
)
```

Next, this code initiates a new conversation thread with the OpenAI assistant, starting with a user's query. The query from the user asks which company had the highest open stock value in 2021 and what that value was in dollars. This thread will facilitate a dialogue with the assistant to retrieve and provide the requested financial information based on the data available in the vector store.

```python
from openai import AssistantEventHandler
from typing_extensions import override


class EventHandler(AssistantEventHandler):
    @override
    def on_text_created(self, text) -> None:
        # Called when the assistant starts writing its reply
        print("\n Assistant > ", end="", flush=True)

    @override
    def on_tool_call_created(self, tool_call):
        # Called when the assistant invokes a tool such as file_search
        print(f"\n Assistant > {tool_call.type}\n", flush=True)

    @override
    def on_message_done(self, message) -> None:
        # Print the complete text of the finished message
        message_content = message.content[0].text
        print(message_content.value)


# Stream the run and let the handler print events as they arrive
with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=assistant.id,
    event_handler=EventHandler(),
) as stream:
    stream.until_done()
```

Finally, we define a custom EventHandler class for handling events from an OpenAI assistant. It overrides methods to print specific information when text is created, a tool call is made or a message is completed.

It then uses this event handler to stream real-time updates from an ongoing conversation thread, processing and displaying the assistant's responses and tool activities as they occur.

Output:
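Answers produced with File Search usually contain inline citation markers (they typically look like 【4:0†source】), and each marker is described by an entry in `message.content[0].text.annotations`. The handler above prints them raw. The sketch below, modeled on the approach in OpenAI's File Search guide, replaces each marker with a numbered reference and collects the cited file names. `format_citations` is a hypothetical helper, and `filename_for` is an assumed callable that you could implement as `lambda file_id: client.files.retrieve(file_id).filename`.

```python
from types import SimpleNamespace
from typing import Callable, List, Tuple


def format_citations(text_obj, filename_for: Callable[[str], str]) -> Tuple[str, List[str]]:
    """Replace citation markers with [n] and return the cleaned text plus a list of sources."""
    value = text_obj.value
    sources: List[str] = []
    for index, annotation in enumerate(text_obj.annotations):
        # annotation.text is the exact marker string that appears in the answer
        value = value.replace(annotation.text, f"[{index}]")
        citation = getattr(annotation, "file_citation", None)
        if citation is not None:
            sources.append(f"[{index}] {filename_for(citation.file_id)}")
    return value, sources


# Offline demo with objects shaped like the SDK's text content and annotations
fake_text = SimpleNamespace(
    value="The answer is in the report【4:0†source】.",
    annotations=[
        SimpleNamespace(text="【4:0†source】", file_citation=SimpleNamespace(file_id="file-abc")),
    ],
)
cleaned, sources = format_citations(fake_text, {"file-abc": "report.pdf"}.get)
print(cleaned)
print("\n".join(sources))
```

Inside on_message_done you could call `format_citations(message.content[0].text, ...)` and print the cleaned text followed by the list of sources.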

Example 2:

We shall now try Apple's 10-K report for 2023, assuming the PDF has been added to the vector store with the same upload code as in Example 1:

The code:

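```python
thread = client.beta.threads.create(
    messages=[
        {
            "role": "user",
            "content": "What are the products and services provided by Apple?",
        }
    ]
)

# Stream the answer with the same EventHandler as in Example 1
with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=assistant.id,
    event_handler=EventHandler(),
) as stream:
    stream.until_done()
```

Output: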

b. Code interpreter:

The Code Interpreter is a powerful feature that enables Assistants to execute Python code within a secure, sandboxed environment. This tool is designed to handle files of various formats and data types, allowing it to process and analyze information efficiently. It can also create and save files containing both data and visualizations, such as graphs. With Code Interpreter, the Assistant is capable of tackling complex coding and mathematical problems through iterative execution. If the initial code fails to run, the Assistant can adjust and reattempt different versions of the code, refining its approach until successful execution is achieved.

We shall now look at a few examples:

Example 1:

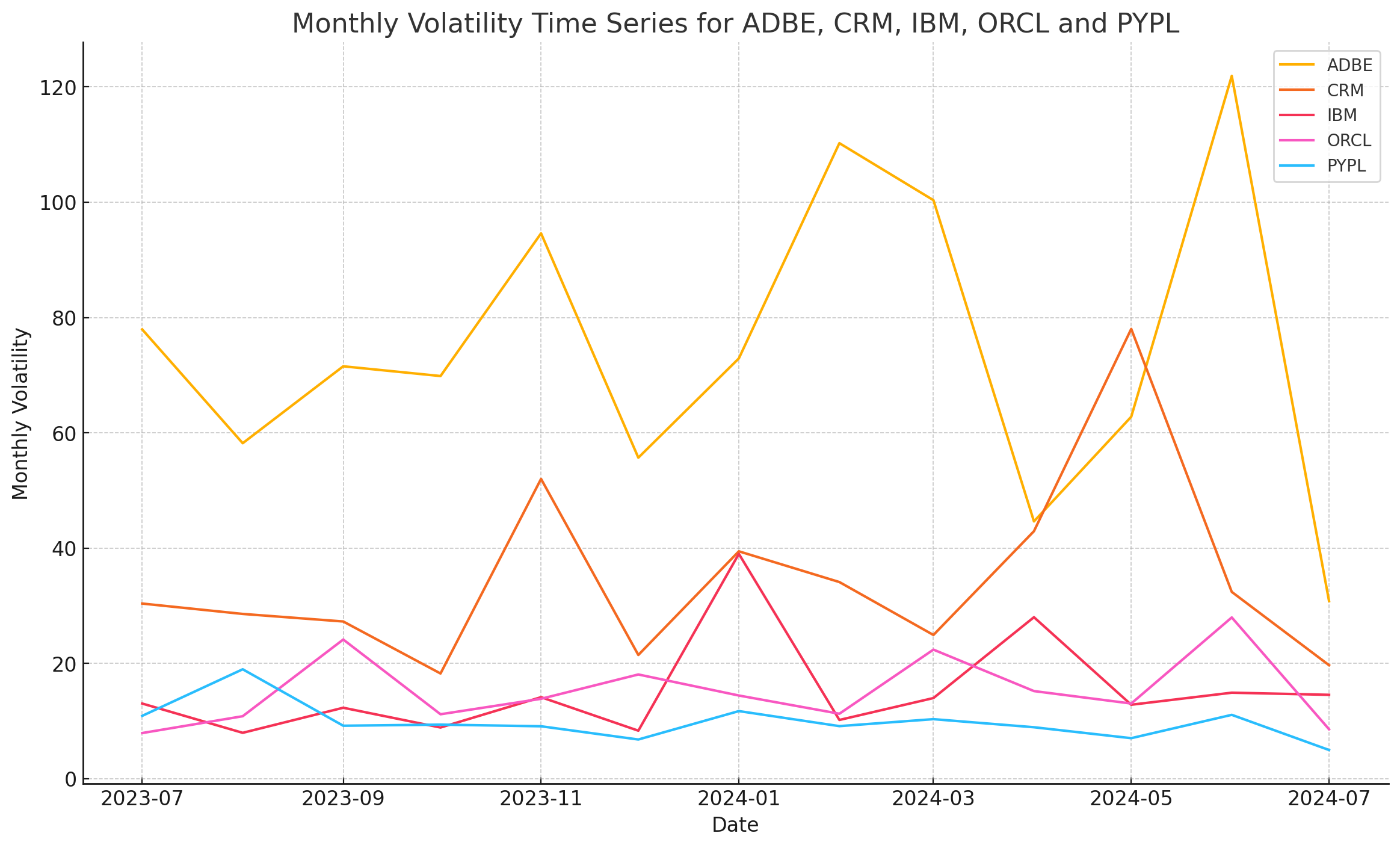

We'll use a CSV file containing the monthly volatility of Adobe, Salesforce, IBM, Oracle and PayPal over the past year.

Code:

As in the previous section, we install openai and enter the API key.

```bash
pip install openai
```

```python
import time

from openai import OpenAI

OPENAI_API_KEY = 'ENTER_YOUR_API_KEY'

client = OpenAI(api_key=OPENAI_API_KEY)

# Run this once to upload the file
with open("/content/File_name.csv", "rb") as f:
    file = client.files.create(file=f, purpose="assistants")

# The returned object already carries the ID of the newly uploaded file
file_id = file.id
print(file_id)
```

This code uploads a CSV file to the OpenAI platform for use with Assistants and prints the ID of the newly created file. This file ID is used in the following steps to give the assistant access to the data.

Output:

```python
# Create the assistant
assistant = client.beta.assistants.create(
    instructions=(
        "You are a data analysis assistant. When given a CSV file with monthly volatility "
        "for 5 different companies, generate basic time series statistics using Pandas "
        "and plot the time series data."
    ),
    model="gpt-4o",
    tools=[{"type": "code_interpreter"}],
    tool_resources={
        "code_interpreter": {
            # Make the uploaded CSV available inside the sandbox
            "file_ids": [file.id]
        }
    },
)

print(assistant)
```

The above code creates a new assistant designed for data analysis tasks. The assistant is instructed to generate basic time series statistics and plot data from a CSV file that includes the monthly volatility for 5 different companies. It utilizes the "gpt-4o" model and incorporates the Code Interpreter tool to process the CSV file. The file.id of the previously uploaded file is linked to the assistant, enabling it to access and analyze the data for generating the requested statistics and visualizations.

Output:

```python
thread = client.beta.threads.create()
print(thread)

message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content=(
        "Generate me basic time series statistics using Pandas and plot the time series data. "
        "Provide a link to download the image. Provide me a link to download the script as well."
    ),
)

print(message)
```

We create a new conversation thread and then send a user message within that thread, requesting basic time series statistics and plots generated with Pandas. The message also asks for download links to the generated image and script. The thread and message details are printed to confirm their creation and to facilitate further interactions with the assistant.

Output:

```python
run = client.beta.threads.runs.create(
    thread_id=thread.id,
    assistant_id=assistant.id,
)

# Poll while the run is still pending. Besides "completed" and "failed", a run can
# also end as "cancelled", "expired" or "incomplete", so waiting only for the
# first two statuses could loop forever.
while run.status in ["queued", "in_progress", "cancelling"]:
    time.sleep(10)
    run = client.beta.threads.runs.retrieve(
        thread_id=thread.id,
        run_id=run.id,
    )
    print(run.status)
```

Next, we start a run on the thread with our assistant and monitor its status, retrieving it every 10 seconds while it is still "queued", "in_progress" or "cancelling". The loop therefore exits on any final outcome, such as "completed", "failed" or "expired", and the current status is printed at each check so you can track the assistant's progress.

Output:
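The polling loop can also be factored into a reusable helper with a timeout. In this sketch the `wait_for_run` name and its parameters are our own; `retrieve` is any zero-argument callable returning an object with a `status` attribute, for example `lambda: client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)`. It stops on every status after which the run will not progress by itself, including "requires_action", which means the run is waiting for your function outputs.

```python
import time
from types import SimpleNamespace
from typing import Any, Callable

# Statuses after which a run will not progress on its own.
# "requires_action" means the run is waiting for tool outputs from your code.
STOP_STATUSES = {"completed", "failed", "cancelled", "expired", "incomplete", "requires_action"}


def wait_for_run(retrieve: Callable[[], Any], interval: float = 2.0, timeout: float = 300.0) -> Any:
    """Call retrieve() until the run reaches a stop status; raise TimeoutError after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    run = retrieve()
    while run.status not in STOP_STATUSES:
        if time.monotonic() > deadline:
            raise TimeoutError(f"run still {run.status!r} after {timeout} seconds")
        time.sleep(interval)
        run = retrieve()
    return run


# Offline demo: a fake retrieve() that walks through typical statuses
statuses = iter(["queued", "in_progress", "in_progress", "completed"])
final_run = wait_for_run(lambda: SimpleNamespace(status=next(statuses)), interval=0)
print(final_run.status)
```

Recent versions of the openai Python SDK also provide `client.beta.threads.runs.create_and_poll`, which creates a run and polls it for you; a hand-written helper like this one is mainly useful when you want your own timeout or logging.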

```python
messages = client.beta.threads.messages.list(
    thread_id=thread.id,
)

for message in messages:
    print("-" * 50)
    # Print the role of the sender
    print(f"Role: {message.role}")

    # Process each content item in the message
    for content in message.content:
        # Check if the content is text
        if content.type == 'text':
            print(content.text.value)

            # Print details about annotations and download any generated files
            for annotation in content.text.annotations:
                # Only file_path annotations point to files created by Code Interpreter
                if annotation.type != 'file_path':
                    continue
                print(f"Annotation Text: {annotation.text}")
                print(f"File_Id: {annotation.file_path.file_id}")
                annotation_data = client.files.content(annotation.file_path.file_id)
                annotation_data_bytes = annotation_data.read()
                # Save under the file name at the end of the sandbox path
                filename = annotation.text.split('/')[-1]
                with open(filename, "wb") as out_file:
                    out_file.write(annotation_data_bytes)

        # Check if the content is an image file and print its file ID
        elif content.type == 'image_file':
            print(f"Image File ID: {content.image_file.file_id}")

    print("-" * 50)
    print('\n')
```

Finally, this code retrieves and processes all messages from the conversation thread (by default, the newest message is listed first). It prints the role of each message sender and checks the content of each message. If the content is text, it prints the text and, for each file-path annotation, downloads the referenced file and saves it locally. If the content is an image file, it prints the image file ID.

Output:

The image and the script will be saved in your working directory.

Image created by the interpreter:

Code generated as a Python script:

```python
import pandas as pd
import matplotlib.pyplot as plt

# Load the CSV file
file_path = 'your_file_path.csv'
data = pd.read_csv(file_path)

# Convert year and month to a datetime
data['date'] = pd.to_datetime(data[['year', 'month']].assign(day=1))

# Drop NaN values
cleaned_data = data.dropna()

# Pivot the data to have tickers as columns and dates as index
pivot_data = cleaned_data.pivot(index='date', columns='ticker', values='monthly_volatility')

# Drop NA values if any
pivot_data.dropna(inplace=True)

# Generate basic statistics
basic_stats = pivot_data.describe()

# Plot the time series data
plt.figure(figsize=(14, 8))
for column in pivot_data.columns:
    plt.plot(pivot_data.index, pivot_data[column], label=column)

plt.title('Monthly Volatility Time Series for ADBE, CRM, IBM, ORCL and PYPL')
plt.xlabel('Date')
plt.ylabel('Monthly Volatility')
plt.legend()
plt.grid(True)
plt.savefig('timeseries_plot.png', bbox_inches='tight')

print(basic_stats)
```

c. Function calling:

- The function calling feature extends GPT-4-class models (such as GPT-4o) with external functions through a simple loop. First, the application sends its function definitions along with the user's prompt to the OpenAI API, which packages them into the model's context and forwards them to the model.

- The model analyzes the prompt, decides which function(s) to call, and specifies the arguments. The function name and its arguments are then returned to the application via the API; the model does not run the function itself.

- Next, the application invokes the specified function with the provided arguments and collects the result. It sends the result back to the API; with the Assistants API, this is done by submitting tool outputs to the run, and the thread keeps the message history for you.

- Using this updated context, the model generates a final, informed response to the user's original prompt. This response is sent back through the API to the application and ultimately delivered to the user, closing the loop. This process allows the model to handle more complex queries by leveraging real-time data and external computations.
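On the application side, the third step of this loop boils down to looking up each requested function by name, decoding its JSON arguments, and returning the results keyed by tool-call ID. Below is a minimal, self-contained sketch with hypothetical names (`dispatch_tool_calls`, plus a toy `add` function in the demo). The fake tool calls only mimic the `id`, `function.name` and `function.arguments` attributes that the handler code in this section reads from the SDK objects. Errors are returned to the model as text instead of crashing the run.

```python
import json
from types import SimpleNamespace
from typing import Any, Callable, Dict, List


def dispatch_tool_calls(tool_calls, registry: Dict[str, Callable[..., Any]]) -> List[dict]:
    """Execute each requested function locally and build the tool_outputs list."""
    tool_outputs = []
    for call in tool_calls:
        func = registry.get(call.function.name)
        if func is None:
            output = f"Error: unknown function {call.function.name!r}"
        else:
            try:
                # The model sends its arguments as a JSON-encoded string
                kwargs = json.loads(call.function.arguments or "{}")
                output = func(**kwargs)
            except (json.JSONDecodeError, TypeError) as exc:
                # Report malformed JSON or argument mismatches (TypeError) back as text
                output = f"Error: {exc}"
        # Each output is a string tied to the ID of the call it answers
        tool_outputs.append({"tool_call_id": call.id, "output": str(output)})
    return tool_outputs


# Offline demo with fake tool calls shaped like the SDK objects
def add(a: float, b: float) -> float:
    return a + b


calls = [
    SimpleNamespace(id="call_1", function=SimpleNamespace(name="add", arguments='{"a": 2, "b": 3}')),
    SimpleNamespace(id="call_2", function=SimpleNamespace(name="divide", arguments="{}")),
]
outputs = dispatch_tool_calls(calls, {"add": add})
print(outputs)
```

A dictionary of callables replaces a growing if/elif chain, so adding a tool means registering one more function; the resulting list has the same shape that `submit_tool_outputs` expects.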

We will use the following dataset, which contains data for popular stocks over the last 3 years:

Code:

```python
import time
from datetime import datetime, timedelta

import pandas as pd
from IPython.display import display, Markdown, clear_output
from openai import OpenAI
from openai import AssistantEventHandler
from typing_extensions import override

OPENAI_API_KEY = 'ENTER_YOUR_API_KEY'

client = OpenAI(api_key=OPENAI_API_KEY)

# Load the stock dataset queried by the tool functions.
# Placeholder path: the code expects the columns stock_symbol, date (YYYY-MM-DD),
# open_price, high_price, low_price, close_price and volume.
financial_data = pd.read_csv("/content/stock_data.csv")
```

Firstly, we set up the environment for using OpenAI's API with Python. We import the necessary libraries and modules, including time, datetime, pandas and OpenAI-related components, and initialize the OpenAI client with an API key. We also prepare IPython's display utilities for rendering markdown in a notebook, and load the stock dataset into a pandas DataFrame named financial_data, which the tool functions below query. The file path shown is a placeholder.

```python
import json

import pandas as pd
from IPython.display import display, clear_output, Markdown


class EventHandler(AssistantEventHandler):
    """Custom event handler for processing assistant events."""

    def __init__(self):
        super().__init__()
        self.results = []  # Initialize an empty list to store the results

    @override
    def on_event(self, event):
        # Retrieve events that are denoted with 'requires_action'
        # since these will have our tool_calls
        if event.event == 'thread.run.requires_action':
            run_id = event.data.id  # Retrieve the run ID from the event data
            self.handle_requires_action(event.data, run_id)

    def handle_requires_action(self, data, run_id):
        tool_outputs = []

        # Add a debug print to inspect the structure of data.required_action.submit_tool_outputs.tool_calls
        # and the format of its elements
        print("Inspecting tool calls:")
        print(data.required_action.submit_tool_outputs.tool_calls)

        for tool in data.required_action.submit_tool_outputs.tool_calls:
            # Add a debug print to inspect the tool structure
            print(f"Inspecting tool: {tool}")

            # Assuming arguments are stored as JSON strings
            arguments = json.loads(tool.function.arguments)

            if tool.function.name == "get_stock_price_stats":
                stock_symbol = arguments.get('stock_symbol')
                date = arguments.get('date')
                output = self.get_stock_price_stats(stock_symbol, date)
                tool_outputs.append({"tool_call_id": tool.id, "output": output})

            elif tool.function.name == "calculate_average_price":
                stock_symbol = arguments.get('stock_symbol')
                start_date = arguments.get('start_date')
                end_date = arguments.get('end_date')
                output = self.calculate_average_price(stock_symbol, start_date, end_date)
                tool_outputs.append({"tool_call_id": tool.id, "output": output})

        # Submit all tool_outputs at the same time
        self.submit_tool_outputs(tool_outputs, run_id)

    def submit_tool_outputs(self, tool_outputs, run_id):
        # Use the submit_tool_outputs_stream helper
        with client.beta.threads.runs.submit_tool_outputs_stream(
            thread_id=self.current_run.thread_id,
            run_id=self.current_run.id,
            tool_outputs=tool_outputs,
            event_handler=EventHandler(),
        ) as stream:
            for text in stream.text_deltas:
                print(text, end="", flush=True)

    @override
    def on_text_delta(self, delta, snapshot):
        """Handle the event when there is a text delta (partial text)."""
        # Append the delta value (partial text) to the results list
        self.results.append(delta.value)
        # Call the method to update the Jupyter Notebook cell
        self.update_output()

    def update_output(self):
        """Update the Jupyter Notebook cell with the current markdown content."""
        # Clear the current output in the Jupyter Notebook cell
        clear_output(wait=True)
        # Join all the text fragments stored in results to form the complete markdown content
        markdown_content = "".join(self.results)
        # Display the markdown content in the Jupyter Notebook cell
        display(Markdown(markdown_content))

    def get_stock_price_stats(self, stock_symbol, date):
        """Retrieve stock price statistics for a specific date."""
        data = financial_data[(financial_data['stock_symbol'] == stock_symbol) & (financial_data['date'] == date)]
        if data.empty:
            return f"No data available for {stock_symbol} on {date}."
        stats = data.iloc[0]
        return f"Stock: {stock_symbol}\nDate: {date}\nOpen: ${stats['open_price']:.2f}\nHigh: ${stats['high_price']:.2f}\nLow: ${stats['low_price']:.2f}\nClose: ${stats['close_price']:.2f}\nVolume: {stats['volume']}"

    def calculate_average_price(self, stock_symbol, start_date, end_date):
        """Calculate the average closing price over a date range."""
        data = financial_data[(financial_data['stock_symbol'] == stock_symbol) &
                              (financial_data['date'] >= start_date) &
                              (financial_data['date'] <= end_date)]
        if data.empty:
            return f"No data available for {stock_symbol} from {start_date} to {end_date}."
        average_price = data['close_price'].mean()
        return f"The average closing price for {stock_symbol} from {start_date} to {end_date} is ${average_price:.2f}."
```

This code defines a custom EventHandler class that extends AssistantEventHandler to manage events in a data processing workflow. It overrides the on_event method to handle events flagged with thread.run.requires_action, processing tool calls based on their function names and arguments. The handle_requires_action method parses JSON arguments from the tool calls, executes specific functions like retrieving stock price statistics or calculating average prices, and collects the results. It then submits these results using the submit_tool_outputs method.

The on_text_delta method captures partial text updates and updates the Jupyter Notebook cell dynamically. Additional methods include get_stock_price_stats and calculate_average_price to query and compute data from a financial_data dataset.

```python
assistant = client.beta.assistants.create(
    instructions="You are a financial data assistant. Use the provided functions to answer questions about stock prices.",
    model="gpt-4o",
    tools=[
        {
            "type": "function",
            "function": {
                "name": "get_stock_price_stats",
                "description": "Get stock price statistics for a specific date",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "stock_symbol": {
                            "type": "string",
                            "description": "The stock symbol for the company"
                        },
                        "date": {
                            "type": "string",
                            "description": "The date for the statistics in YYYY-MM-DD format"
                        }
                    },
                    "required": ["stock_symbol", "date"]
                }
            }
        },
        {
            "type": "function",
            "function": {
                "name": "calculate_average_price",
                "description": "Calculate the average closing price for a stock over a date range",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "stock_symbol": {
                            "type": "string",
                            "description": "The stock symbol for the company"
                        },
                        "start_date": {
                            "type": "string",
                            "description": "The start date for the analysis in YYYY-MM-DD format"
                        },
                        "end_date": {
                            "type": "string",
                            "description": "The end date for the analysis in YYYY-MM-DD format"
                        }
                    },
                    "required": ["stock_symbol", "start_date", "end_date"]
                }
            }
        }
    ]
)
```

This section creates a financial data assistant using the OpenAI API with the "gpt-4o" model. It configures the assistant to use two specific functions: get_stock_price_stats, which retrieves stock price statistics for a given date, and calculate_average_price, which calculates the average closing price for a stock over a specified date range. Each function is defined with its parameters and descriptions, ensuring the assistant can accurately process and respond to related queries about stock prices.
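Because the model writes these arguments itself, it can occasionally omit a required field or use a different date format than the descriptions ask for (the user query below writes "30 August 2021", which the model is expected to convert). A defensive check before calling the Python function lets you return a clear error as the tool output instead. This is a minimal sketch, not a full JSON Schema validator: `validate_arguments` is a hypothetical helper that checks only required and unknown keys and string types, plus, as our own assumption, that any parameter whose name ends in `date` parses as YYYY-MM-DD. The schema dictionary repeats the `parameters` of get_stock_price_stats defined above.

```python
from datetime import datetime
from typing import Any, Dict, List

# Copy of the "parameters" schema of get_stock_price_stats from the assistant definition
STOCK_STATS_PARAMS = {
    "type": "object",
    "properties": {
        "stock_symbol": {"type": "string", "description": "The stock symbol for the company"},
        "date": {"type": "string", "description": "The date for the statistics in YYYY-MM-DD format"},
    },
    "required": ["stock_symbol", "date"],
}


def validate_arguments(parameters: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return human-readable problems with `args`; an empty list means they look valid."""
    problems = []
    properties = parameters.get("properties", {})
    for name in parameters.get("required", []):
        if name not in args:
            problems.append(f"missing required argument {name!r}")
    for name, value in args.items():
        spec = properties.get(name)
        if spec is None:
            problems.append(f"unexpected argument {name!r}")
            continue
        if spec.get("type") == "string" and not isinstance(value, str):
            problems.append(f"{name!r} should be a string")
            continue
        # Assumption: parameters whose names end in "date" must use YYYY-MM-DD
        if name.endswith("date") and isinstance(value, str):
            try:
                datetime.strptime(value, "%Y-%m-%d")
            except ValueError:
                problems.append(f"{name!r} is not in YYYY-MM-DD format")
    return problems


print(validate_arguments(STOCK_STATS_PARAMS, {"stock_symbol": "AAPL", "date": "2021-08-30"}))
print(validate_arguments(STOCK_STATS_PARAMS, {"stock_symbol": "AAPL", "date": "30 August 2021"}))
```

In handle_requires_action, you could run this check before calling get_stock_price_stats and, if the list is non-empty, send the joined problems back as that call's output so the model can retry with corrected arguments.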

```python
thread = client.beta.threads.create()

message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="Provide the stock price statistics for AAPL on 30 August 2021 and calculate the average price from 2023-01-01 to 2023-06-30.",
)

# Stream the run; the EventHandler answers any function calls along the way
with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=assistant.id,
    event_handler=EventHandler()
) as stream:
    stream.until_done()
```

Finally, we start a new conversation thread with the assistant, sending a user message that requests stock price statistics for AAPL on August 30, 2021, and the average price from January 1, 2023, to June 30, 2023, and then stream the assistant's responses in real time.

Output:

Example 2:

```python
thread = client.beta.threads.create()

message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="How has NVIDIA (NVDA) stock performed in the last year?",
)

with client.beta.threads.runs.stream(
    thread_id=thread.id,
    assistant_id=assistant.id,
    event_handler=EventHandler()
) as stream:
    stream.until_done()
```

Output:

Conclusion

The OpenAI Assistant API represents a significant leap forward in integrating advanced AI into applications. By offering a flexible and powerful suite of tools, it empowers developers to create highly responsive and intelligent virtual assistants.

Embracing this technology can transform user interactions, streamline processes, and unlock new possibilities for innovation. As AI continues to evolve, mastering the Assistant API positions you at the forefront of this exciting frontier, ready to deliver exceptional and personalized experiences.

For a powerful AI companion that can help you delve into company insights, explore dafinchi.ai to discover more.